Named Entity Recognition

Overview

The goal of this tutorial is to use the SliceX AI Trainer to train a sequence labelling model that finds entities in a sentence. Once training is completed, we will use the model for prediction.

Dataset Processing

For this NER tutorial, we will be using our Sequence Labeling API on the publicly available Annotated Corpus for Named Entity Recognition (hosted here on Kaggle by developers Abhishek Thakur et al). This dataset is sampled from the open source GMB (Groningen Meaning Bank) text corpus, and contains a set of 8 entity types that could be of multi-domain interest. There are 47959 samples in the dataset, with a total word count of 1354149.

We will start off by extracting the NER tags and massaging the corpus into the requisite BIO tagging format for our Sequence Labeling API (review the dataset format requirements in the Dataset Requirements section). The raw untagged sample tokens must be passed in a .TXT file and the corresponding BIO tags for each token in a separate .TXT file. We will also need to extract the set of entity tags in this dataset, and map them to label IDs in a label_id.json file.

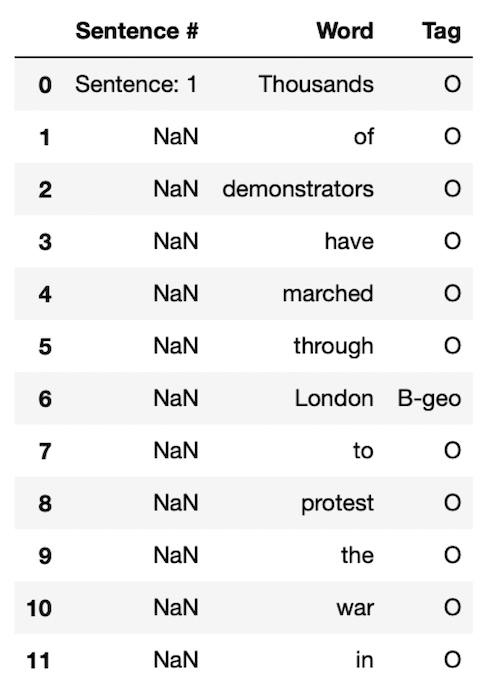

Unpacking the data, you will see that the dataset lists the sentence labels, tokens and tags row-wise:

Take note of the encoding option you are using. The default codec for Python 3 (utf-8) threw encoding errors when used out of the box, due to presence of non-ASCII characters in the dataset. As a quick fix, we first explicitly re-encode our data in ASCII, which is now utf-8 friendly. Note that pesky hanging spaces and bad formatting make some of the samples problematic. We will end up with different numbers of tokens and tags for these samples, and our training job won’t run. For this dataset, there are only a few such samples- so we will drop them! As a handy tip- make sure to check that this requirement is met when you are formatting your own dataset.

Here is a sample code that can help with generating the requisite files for both the train and validation splits:

import pandas as pd

from sklearn.model_selection import train_test_split

import os

import json

#Loading Kaggle data with pandas

df=pd.read_csv('ner_dataset.csv',sep=',',encoding='unicode_escape').drop(columns=['POS'])

df.fillna(method='ffill',inplace=True)

#Extracting set of BIO entity tags

entities = set(pd.Series(df['Tag']).unique())

entities_dict = {}

index = 0

for tag in entities:

entities_dict[str(index)] = tag

index += 1

with open('label_id.json', 'w') as f:

json.dump(entities_dict, f)

#Grouping row-wise tokens and tags into sentences

df['Word']= df.groupby(['Sentence #'])['Word'].transform(lambda x : ' '.join(x))

df['Tag']= df.groupby(['Sentence #'])['Tag'].transform(lambda x : ' '.join(x))

df.drop_duplicates('Sentence #',inplace=True)

#Dropping bad samples

for i in df.index:

if len(df['Tag'][i].split())!=len(df['Word'][i].split()):

df.drop(i,inplace=True)

#Writing train, val split data files to subdirectories

parent=str(os.getcwd())

os.mkdir(os.path.join(parent, "train"))

os.mkdir(os.path.join(parent, "val"))

train, val = train_test_split(df, test_size=0.2)

train['Word'].to_csv('train/sentences.txt', sep='\t', header=None, index=False)

val['Word'].to_csv('val/sentences.txt', sep='\t', header=None, index=False)

train['Tag'].to_csv('train/tags.txt', sep='\t', header=None, index=False)

val['Tag'].to_csv('val/tags.txt', sep='\t', header=None, index=False)

This is what the label_id.json file should look like:

{

"0": "I-org",

"1": "I-art",

"2": "B-tim",

"3": "I-tim",

"4": "O",

"5": "B-geo",

"6": "B-org",

"7": "B-art",

"8": "B-gpe",

"9": "I-nat",

"10": "B-per",

"11": "I-geo",

"12": "B-eve",

"13": "I-per",

"14": "I-gpe",

"15": "B-nat",

"16": "I-eve"

}

And here’s a training sample from train/sentences.txt with corresponding tags, from train/tags.txt:

Oil producers are also concerned that economic problems in the United States could depress demand for energy , which would push down the price of oil .

O O O O O O O O O O B-geo I-geo O O O O O O O O O O O O O O O

We now have all the necessary dataset bits and pieces for custom training. Zip them all up into a folder, and let’s get on to specifying the training configuration and launching custom training.

Custom Training

Start training

We will now use the SliceX Trainer CLI to post a request to the main training endpoint, passing a suitable JSON job customization with our request. Note that for an NER task, we want our model to be case sensitive. So under training_config , we will explicitly pass along the preprocessing field in our POST request- taking care to specify “lowercase": false in the arguments. We will turn off data_augmentation , turn on the use_pretrained option, and specify training parameters. Lastly, under model_config specify the API type and model family. Note that the model family for NER is Grapefruit. Let us see how we do on this task with the Grapefruit mini size.

We will run this custom training for 8 epochs. For the above specs, here is an example training request you can use ( setting model name to ner-gmb) :

curl -X 'POST' \

'https://api.slicex.ai/trainer/language/training-jobs' \

-H 'accept: application/json' \

-H 'x-api-key: API Key' \

-H 'Content-Type: application/json' \

-d '{

"training_config": {

"batch_size": 64,

"learning_rate": 0.001,

"num_epochs": 8,

"language": "english",

"data_augmentation": false,

"use_pretrained": true

},

"model_config": {

"name": "test-nergbm",

"type": "sequence-labeling",

"family": "Grapefruit",

"size": "mini",

"preprocessing": {

"lowercase": false

}

},

"dataset_url": "DATASET_URL"

}'

You should get a response that looks like this:

{"id":"XYZ"}

This is your MODEL_ID. Make sure to note it. You will now be using it for monitoring and custom inference. For us it is XYZ.

Monitoring the training job

You can now monitor your training job and check performance stats with a GET request to the following endpoint. You will need your MODEL_ID to retrieve logs for the right job:

curl -X 'GET' \

'https://api.slicex.ai/trainer/language/training-jobs/XYZ' \

-H 'accept: application/json' \

-H 'x-api-key: API Key' \

-H 'Content-Type: application/json'

Here is an example response you will see when the job has completed:

{"data":{"model":{"id":"XYZ","app_id":"APIKey","name":"test-nergbm","training_status":"READY","type":"sequence-labeling","modality":"language","created":"2022-08-05T22:25:24.540233+00:00"},"training_stats":{"batch_size":64,"main_eval_metric":"f1","best_eval_metric_value":0.8086,"epoch":8,"metrics":{"elapsed_time":[117.36523008346558,117.15978121757507,118.45917224884033,118.10859775543213,117.04211449623108,117.15716671943665,116.94353413581848,120.03696990013123],"training":{"loss":[0.47139031956593197,0.17295168173809847,0.14545568250119686,0.13169810272753238,0.12158211844662825,0.11657782812913259,0.11255556429425875,0.10910257587830226]},"validation":{"f1":[0.6555,0.7498,0.7786,0.7889,0.7926,0.7992,0.8039,0.8086]}}},"status":"READY","created":"2022-08-05T22:25:24.540233+00:00"}}

Custom inference

We are now ready to perform custom inference with our sequence labeling model! We will use the MODEL_ID to POST an inference query to the SliceX AI PredictorAPI endpoint. Here is an example request:

curl -X 'POST' \

'https://api.slicex.ai/predictor/language/model/MODEL_ID' \

-H 'accept: application/json' \

-H 'x-api-key: API Key' \

-H 'Content-Type: application/json' -d "{ \"query\": \"Calvin Klein's summer collection drops in Paris today! \"

}"

You should see a response like this:

{"data":{"labels":["per","geo"],"scores":[0.8107854723930359,0.9786347150802612],"tokens":["Calvin Klein's","Paris"],"token_start_positions":[0,6]},"metadata":{"model_inference_time_ms":48.192138671875}}

As we can see, the labeler correctly identified the named entities and their corresponding spans and tags.

Your custom sequence labeler is now ready to use!